Meta Calls For Standardized Labeling Of AI-Generated Visual Content

- February 12, 2024

- allix

- AI in Business

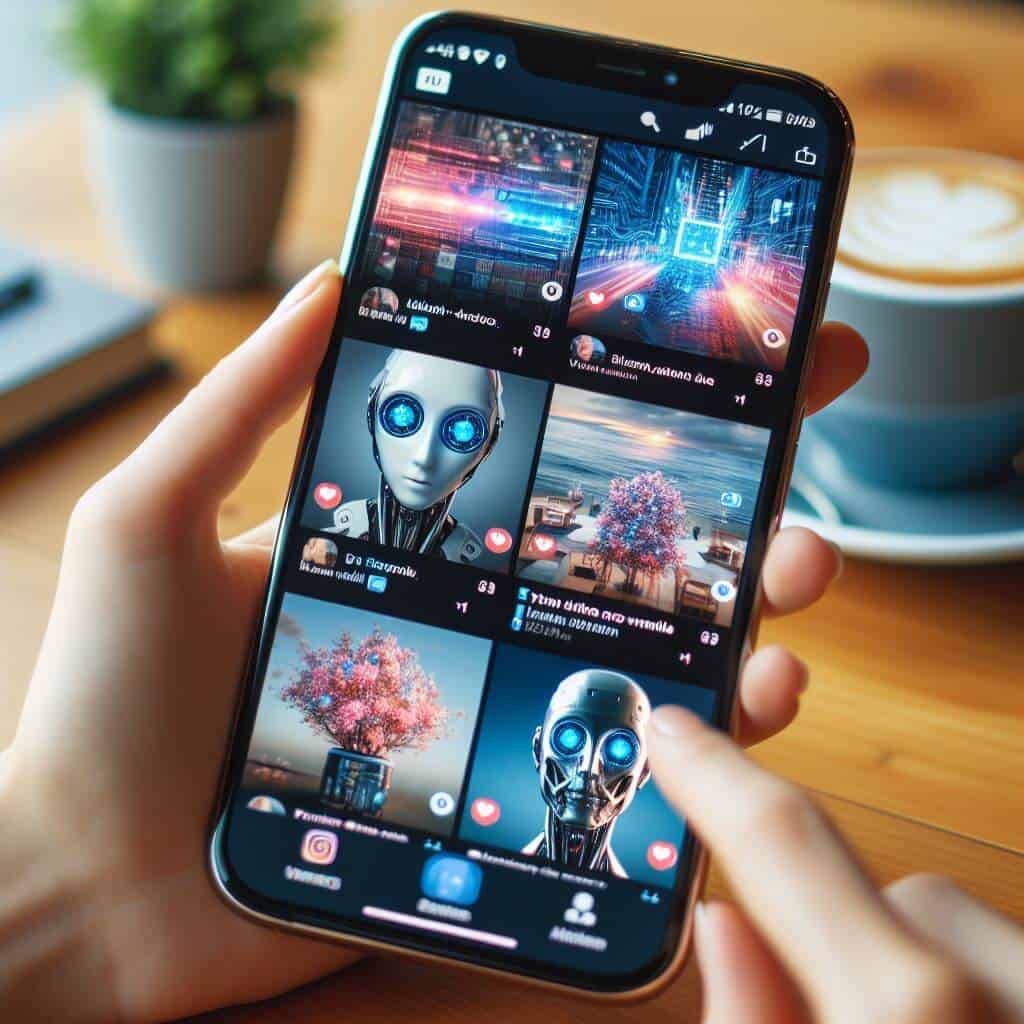

On Tuesday, Meta announced that it is working with other technology companies to develop standards that will let it better detect and label AI-generated images shared across its platforms by its vast user base.

The Silicon Valley-based tech giant expects to roll out a system within months that accurately identifies and tags AI-generated visuals on its platforms: Facebook, Instagram, and Threads. With elections coming up in countries home to half of the world's population, platforms such as Meta's feel growing pressure to monitor AI-generated content, amid concerns that malicious actors will use it to spread misinformation. "This technology needs further development and it won't cover everything, but it's a start," Nick Clegg, the company's president of global affairs, told AFP in an interview.

Since December, Meta has marked images created by its own AI tools with both visible and hidden indicators. Clegg said the company now wants to expand these efforts by partnering with outside companies to make users more aware of such content. In a blog post, Meta said it is working with industry peers to establish common technical standards that signal when AI has been used to create a piece of content. This work will involve organizations Meta has previously collaborated with on AI-related recommendations, including industry leaders such as OpenAI, Google, Microsoft, and Midjourney.

As Clegg pointed out, however, while the industry has made some progress in embedding "signals" into AI-generated images, labeling AI-generated audio and video has not advanced as quickly. He acknowledged that invisible tagging will not completely eliminate the threat of fraudulent images, but he believes it will reduce the spread of such content as much as current technology allows.

In the meantime, Meta encourages users to be skeptical of online content: to assess how reliable a source is and to scrutinize details that seem unnatural. Prominent public figures, and women in particular, have been targeted by realistic but fabricated manipulations known as "deepfakes." In one notable case, fake nude images of pop superstar Taylor Swift went viral on X, the platform formerly known as Twitter.

The rise of AI tools that can generate content has raised concerns about abuse, such as using ChatGPT and similar tools to sow political chaos through disinformation or AI-generated impersonations. Just last month, OpenAI announced that it would bar political figures and organizations from using its technology. Meta, for its part, requires advertisers to disclose any use of AI to create or edit visual or audio content in political ads.
