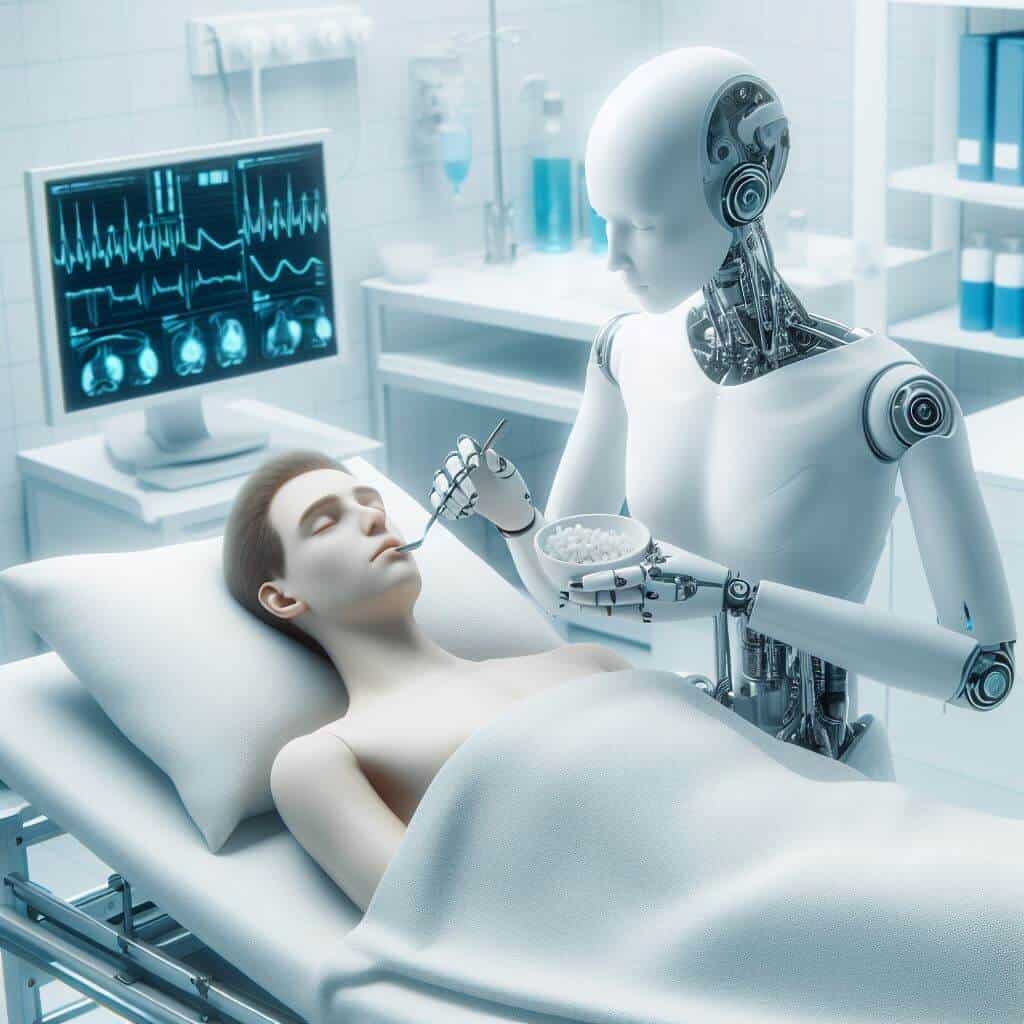

An Advanced Robotic Feeding Device Helps People With Significant Mobility Issues

- May 10, 2024

- allix

- AI Projects

Researchers at Cornell University have developed an automatic feeding device designed to help people with severe mobility problems, such as those caused by spinal cord injuries, cerebral palsy, and multiple sclerosis. The system combines machine learning, computer vision, and multiple sensors to safely feed people who cannot feed themselves.

“Robotic feeding for people with significant mobility limitations is challenging because many of them cannot lean forward to meet the spoon and need the food placed directly inside their mouths,” explained Tapomayukh “Tapo” Bhattacharjee, associate professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science and lead developer of the project. “The task becomes even more difficult when they also have other complex medical conditions.”

The work was detailed in the paper “Feel the Bite: Robot-Assisted Inside-Mouth Bite Transfer using Robust Mouth Perception and Physical Interaction-Aware Control,” which the team presented at the ACM/IEEE International Conference on Human-Robot Interaction (HRI), held March 11-14 in Boulder, Colorado. The paper received an honorable mention for best paper, and the team’s broader robotic feeding demonstration won the best demo award.

Bhattacharjee, who heads the EmPRISE lab at Cornell University, has worked extensively on enabling robots to feed people, an extremely complex activity. The robot’s training covers every step, from recognizing food on a plate to placing it accurately inside a person’s mouth. “The last five centimeters, from the utensil to inside the mouth, are extremely difficult,” Bhattacharjee said.

He noted that some people can open their mouths only slightly, perhaps as little as 2 centimeters, while others experience sudden involuntary muscle spasms. In addition, some users take control of the feeding process by maneuvering the utensil with their tongues to position the food where they can manage it. “Current technology makes initial assumptions about the user’s position that may not hold throughout the feeding process, which limits its effectiveness,” said Rajat Kumar Jenamani, the paper’s lead author and a doctoral student in computer science.

To overcome these obstacles, the team gave their robot two key features: real-time mouth tracking that adapts to the user’s movements, and an interaction-aware response system that detects and adjusts to physical contact as it happens. This lets the robot distinguish among sudden spasms, intentional bites, and a user’s deliberate attempts to manipulate the utensil, allowing more nuanced interaction with the user. In trials with 13 participants at three locations (the EmPRISE lab, a medical center in New York, and a private home in Connecticut), the robotic system fed participants safely and comfortably.
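The article does not describe how the team’s controller tells these interactions apart. As a purely illustrative sketch (not the Cornell system), the Python below labels a short window of readings from a hypothetical force sensor on the utensil using a toy rule set: a brief, very large spike counts as a spasm, sustained force along the shaft as a bite, and sustained sideways force as the user steering the utensil. The `ForceSample` fields, the thresholds, and the `RESPONSE` table are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Sequence


class Interaction(Enum):
    NONE = "none"
    SPASM = "spasm"
    BITE = "bite"
    MANIPULATION = "manipulation"


@dataclass
class ForceSample:
    axial: float    # hypothetical force along the utensil shaft, in newtons
    lateral: float  # hypothetical force perpendicular to the shaft, in newtons


# Illustrative thresholds only; not values from the study.
CONTACT_N = 0.5        # total force above this counts as contact
SPASM_PEAK_N = 8.0     # a peak above this may indicate a spasm...
SPASM_MAX_SAMPLES = 5  # ...if at most this many samples in the window are in contact

# Hypothetical reactions the arm could take for each label.
RESPONSE = {
    Interaction.NONE: "continue approach",
    Interaction.SPASM: "retract utensil",
    Interaction.BITE: "hold still until released",
    Interaction.MANIPULATION: "comply with the user's guidance",
}


def classify(window: Sequence[ForceSample]) -> Interaction:
    """Label a short window of force readings with a toy rule set."""
    in_contact = [s for s in window if abs(s.axial) + abs(s.lateral) > CONTACT_N]
    if not in_contact:
        return Interaction.NONE
    peak = max(abs(s.axial) + abs(s.lateral) for s in in_contact)
    # Brief but very large force: treat as an involuntary spasm.
    if peak > SPASM_PEAK_N and len(in_contact) <= SPASM_MAX_SAMPLES:
        return Interaction.SPASM
    mean_axial = sum(abs(s.axial) for s in in_contact) / len(in_contact)
    mean_lateral = sum(abs(s.lateral) for s in in_contact) / len(in_contact)
    # Mostly along the shaft: a bite. Mostly sideways: the user steering the utensil.
    if mean_axial >= mean_lateral:
        return Interaction.BITE
    return Interaction.MANIPULATION
```

Fixed thresholds like these are the simplest possible choice; they are used here only to show why separating the three cases matters for deciding how the arm should react, for example `RESPONSE[classify(window)]`.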

“This is one of the most thorough studies of an autonomous robotic feeding device in real-world conditions,” Bhattacharjee added. The device consists of a robotic arm fitted with a specialized sensor, and it uses two cameras to refine its perception of the user’s mouth, compensating for occlusions that may block a camera’s view.
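The article likewise gives no details of how the two camera views are combined. One simple, generic approach, shown here only as an assumption and not as the team’s method, is a confidence-weighted average that ignores any view whose detector confidence falls below a cutoff (for example, because something blocks it) and falls back to the last known mouth position when both views are lost. `MouthEstimate`, `fuse`, and `MIN_CONFIDENCE` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class MouthEstimate:
    position: Point3   # hypothetical mouth center in the robot's frame, in meters
    confidence: float  # hypothetical detector confidence between 0 and 1


MIN_CONFIDENCE = 0.3  # illustrative cutoff below which a view counts as occluded


def fuse(a: Optional[MouthEstimate], b: Optional[MouthEstimate],
         last: Optional[Point3] = None) -> Optional[Point3]:
    """Combine two camera estimates, ignoring views that are missing or occluded."""
    usable = [e for e in (a, b) if e is not None and e.confidence >= MIN_CONFIDENCE]
    if not usable:
        # Both views blocked: keep the last known position (None if there is none).
        return last
    total = sum(e.confidence for e in usable)
    # Confidence-weighted average of the usable estimates, axis by axis.
    return tuple(
        sum(e.confidence * e.position[i] for e in usable) / total for i in range(3)
    )
```

For example, if one camera’s view is blocked, `fuse` simply returns the other camera’s estimate; if both are blocked, it holds the last known position.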

Jenamani highlighted the emotional impact of their field research, recalling the moment when the parents of a young girl with schizencephaly and tetraplegia watched her successfully eat using the system for the first time, which moved them deeply. Although more research is needed to determine the system’s long-term suitability, the promising results suggest significant potential to improve independence and quality of life for people with severe mobility impairments. “It’s amazing and extremely rewarding,” Bhattacharjee said of the project’s impact.
