Leveraging Azure Machine Learning for Advanced App Development

- January 12, 2024

- allix

- AI Education

The advent of cloud computing has radically transformed how businesses approach application development. Among the plethora of services that cloud platforms offer, machine learning (ML) capabilities stand out for their potential to bring about intelligent solutions that can self-improve and understand complex patterns. Microsoft’s Azure ML framework is at the forefront of this innovation, offering tools and resources for developers to construct sophisticated applications with embedded predictive analytics and AI services.

Understanding Azure ML

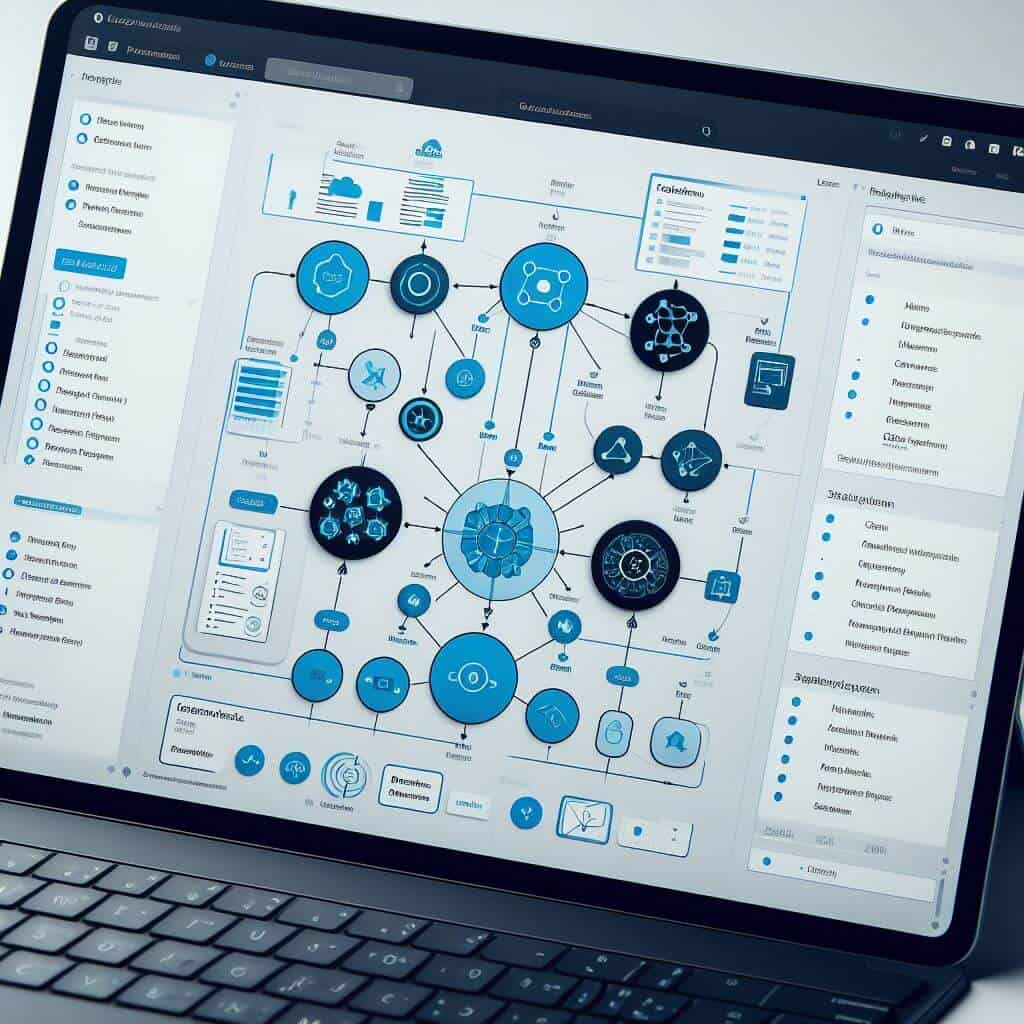

At the core of designing innovative solutions in the tech landscape is the powerful and dynamic ecosystem of Microsoft Azure Machine Learning. This robust framework stands as a testament to Microsoft’s commitment to democratizing artificial intelligence and machine learning, offering an array of services, tools, and infrastructures that are vital for building, deploying, and managing ML models at scale.

The Azure ML framework is designed to serve diverse user groups. Data scientists, ML professionals, and developers, regardless of their expertise in machine learning, find a set of tools tailored to their proficiency levels. From visual interfaces to coding environments, Azure ML aims to make intelligent application development accessible to all.

When a user opens an Azure ML workspace, they enter an environment dedicated to machine learning projects. The workspace is built around machine learning operations, often abbreviated as MLOps, giving systematic control over every stage of the machine learning project lifecycle. Here, a blend of storage options, computing resources, and collaborative tools comes together to manage machine learning workflows.

The seamless integration of experimentation services within the workspace is pivotal. It provides a testing ground for developing and honing ML models, facilitating iterative improvement through trial and error in a controlled environment. The pipeline architectures provided allow for sustainable model development and help reduce errors through continuous integration and delivery practices.

Deeply integrated into the ecosystem is Azure ML Studio. Its visual designer allows developers and data scientists to build, test, and deploy machine learning models with little or no code. By dragging and dropping dataset modules and analysis components onto a canvas, users can assemble ML models suited to their specific requirements. The studio appeals to those looking for rapid prototyping or who may not have deep coding expertise, bridging the gap between concept and implementation.

For more seasoned professionals, the Azure ML service supports a range of open-source tools and platforms. These include Jupyter Notebooks, which are popular among data science professionals for interactive computing and data visualization. The Azure ML software development kit (SDK) for Python is also vital, giving direct programmatic access to Azure ML’s features. R users should note that the dedicated SDK for R has been deprecated; R workloads are generally run as jobs submitted through the Azure ML CLI instead.

Storage and dataset management within Azure ML uphold the integrity and security of data, which is the linchpin of any machine learning project. Azure’s robust security measures protect sensitive information while facilitating shared access amongst team members where necessary. Datasets can be version-controlled, ensuring that the right data is used during model training and reducing the potential for errors that could arise from data discrepancies.
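The idea behind dataset versioning can be sketched in a few lines of plain Python. This is a minimal illustration, not the Azure ML API: `DatasetStore`, `register`, and `fingerprint` are hypothetical names, and the store lives in memory. It assumes a new version is created only when the content actually changes, which is detected with a content hash.

```python
import hashlib
import json


def fingerprint(rows):
    """Return a stable SHA-256 digest for a list of JSON-serializable rows."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class DatasetStore:
    """Hypothetical in-memory store; not part of any Azure SDK."""

    def __init__(self):
        self._versions = {}  # dataset name -> list of (digest, rows)

    def register(self, name, rows):
        """Register rows under a name and return the 1-based version number."""
        history = self._versions.setdefault(name, [])
        digest = fingerprint(rows)
        # Re-registering identical content reuses the existing version.
        for number, (known_digest, _) in enumerate(history, start=1):
            if known_digest == digest:
                return number
        history.append((digest, list(rows)))
        return len(history)

    def get(self, name, version):
        """Fetch the rows recorded for an exact version."""
        return self._versions[name][version - 1][1]


store = DatasetStore()
v1 = store.register("sales", [{"units": 3}, {"units": 5}])
v2 = store.register("sales", [{"units": 3}, {"units": 5}, {"units": 8}])
```

Pinning a training run to `store.get("sales", v1)` rather than "whatever is current" is what makes the run repeatable later.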

The framework also recognizes that computation needs vary. With compute targets that range from a single small machine to large multi-node clusters, Azure ML matches resources to the task’s computational demands. For example, a model developed on modest compute can be served in production on Azure Kubernetes Service (AKS). This means that as machine learning models grow in complexity and data volume, teams can move to larger compute without significant architectural changes.

Streamlining the Development Process with Azure ML

Developing intelligent applications is a nuanced process that entails a series of intricate steps, often referred to as the machine learning lifecycle. This lifecycle includes data preparation, model training and evaluation, model deployment, and ongoing model management and monitoring. Azure Machine Learning (Azure ML) excels in streamlining this lifecycle, providing a cohesive and efficient framework that empowers developers to focus on innovation rather than getting bogged down by the complexity of underlying processes.

A central feature of Azure ML’s approach to simplifying development is automated machine learning (AutoML). AutoML speeds up the model development stage by trying multiple algorithms and hyperparameter settings against the data and ranking the resulting models by a chosen metric. This automation significantly reduces the time and expertise required for model selection and tuning, which are traditionally knowledge-intensive and time-consuming stages of machine learning. AutoML thereby enables developers with varying levels of ML expertise to deliver high-quality models without getting lost in the details of individual algorithms.
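The core loop behind automated model selection can be shown with a toy example. The sketch below is not how Azure ML implements AutoML; it only assumes the general pattern of fitting several candidate models, scoring each with cross-validation, and keeping the one with the lowest error. All function names are illustrative.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Ordinary least squares for a single feature."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    return lambda x: my + slope * (x - mx)


def fit_nearest(xs, ys):
    """1-nearest-neighbour lookup on the training points."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def cross_val_mae(fit, xs, ys, folds=3):
    """Mean absolute error over interleaved folds (index i goes to fold i % folds)."""
    errors = []
    for k in range(folds):
        train = [i for i in range(len(xs)) if i % folds != k]
        test = [i for i in range(len(xs)) if i % folds == k]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors.extend(abs(model(xs[i]) - ys[i]) for i in test)
    return mean(errors)


def select_best(candidates, xs, ys):
    """Score every candidate and return the name with the lowest error."""
    scores = {name: cross_val_mae(fit, xs, ys) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


xs = [0, 1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 3, 5, 7, 9, 11, 13, 15, 17]  # generated from y = 2x + 1
candidates = {"mean": fit_mean, "line": fit_line, "nearest": fit_nearest}
best_name, scores = select_best(candidates, xs, ys)
```

On this perfectly linear data the least-squares candidate wins. A production AutoML system searches a far larger space, including preprocessing steps and hyperparameters, but the select-by-validation-score principle is the same.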

Azure ML also recognizes that the computational demands of ML can be formidable: model training, especially with large datasets or complex neural networks, requires substantial processing power. To meet these varying needs, Azure ML offers a suite of scalable compute resources known as Azure Machine Learning Compute. Through this suite, developers can scale the computational resources allocated to their machine learning workloads up or down. This elasticity helps control costs and keeps resources well utilized, whether for running numerous small jobs in parallel or for undertaking extensive deep learning tasks.
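Elastic scaling between a minimum and maximum node count can be illustrated with a small calculation. This is a sketch under stated assumptions, not the platform’s actual scaling policy: `target_nodes` and `jobs_per_node` are hypothetical, and the rule simply sizes the cluster to the queue and clamps the result to the configured bounds.

```python
import math


def target_nodes(queued_jobs, jobs_per_node, min_nodes=0, max_nodes=4):
    """Return how many nodes a cluster should run for the current queue."""
    if jobs_per_node < 1:
        raise ValueError("jobs_per_node must be at least 1")
    if min_nodes > max_nodes:
        raise ValueError("min_nodes cannot exceed max_nodes")
    needed = math.ceil(queued_jobs / jobs_per_node)
    # Clamp to the configured range: never below min, never above max.
    return max(min_nodes, min(max_nodes, needed))


idle = target_nodes(queued_jobs=0, jobs_per_node=2)
busy = target_nodes(queued_jobs=5, jobs_per_node=2)
capped = target_nodes(queued_jobs=100, jobs_per_node=2)
```

A minimum of zero lets an idle cluster release all of its nodes, which is where most of the cost saving comes from; the maximum caps spending during bursts.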

To support the model development process, Azure ML provides versioning and tracking features. Every step of the machine learning process, from initial datasets to successive iterations of the models, is tracked and version-controlled within the Azure ML workspace. This versioning is crucial because it lends reproducibility and traceability to ML workflows. Consequently, developers can roll back to earlier models or datasets, compare the performance of different versions, and systematically refine their machine learning applications with confidence.
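What run tracking records, and why it makes comparison possible, can be sketched as follows. `ExperimentLog` and `Run` are hypothetical stand-ins rather than Azure ML classes, and the accuracy figures are made-up illustration values. The only assumption is that each run stores the dataset version, the parameters, and the resulting metrics together.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    run_id: int
    dataset_version: int
    params: dict
    metrics: dict = field(default_factory=dict)


class ExperimentLog:
    """Hypothetical in-memory run history; illustrative only."""

    def __init__(self):
        self.runs = []

    def start_run(self, dataset_version, **params):
        """Create a run that remembers which data and settings it used."""
        run = Run(len(self.runs) + 1, dataset_version, params)
        self.runs.append(run)
        return run

    def best(self, metric):
        """Return the run with the highest value for the given metric."""
        return max(self.runs, key=lambda r: r.metrics[metric])

    def compare(self, first_id, second_id, metric):
        """Return how much the second run improved on the first."""
        first, second = self.runs[first_id - 1], self.runs[second_id - 1]
        return second.metrics[metric] - first.metrics[metric]


log = ExperimentLog()
run1 = log.start_run(dataset_version=1, learning_rate=0.1)
run1.metrics["accuracy"] = 0.81  # illustrative value
run2 = log.start_run(dataset_version=2, learning_rate=0.05)
run2.metrics["accuracy"] = 0.86  # illustrative value
winner = log.best("accuracy")
```

Because `winner` carries its `dataset_version` and `params`, the result can be reproduced or rolled back to without guessing which inputs produced it.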

Once the model has been trained and evaluated, it is equally important to move it smoothly into a production environment where it can start delivering value. Azure ML supports this with a model registry that stores trained models along with their version information and associated metadata. From the registry, models can be deployed to various Azure services or edge devices, giving a consistent deployment experience. Deployment pipelines can also be automated; a typical pipeline validates each candidate model and triggers retraining when model performance degrades or new data becomes available. Such pipelines keep deployed models up to date with little manual intervention, leading to more responsive application performance.
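A deployed model is usually wrapped in a small scoring script. Azure ML entry scripts follow an `init()` / `run()` convention: `init()` runs once at startup and `run()` handles each request. The sketch below follows that shape but is otherwise an assumption: the hard-coded weights stand in for a model file that a real script would load, and the `{"data": [...]}` request format is chosen for illustration.

```python
import json

model = None


def init():
    """Called once when the service starts."""
    global model
    # Placeholder weights standing in for a deserialized model (assumption).
    model = {"weights": [0.4, 0.6], "bias": 0.1}


def run(raw_data):
    """Called per request with the JSON request body as a string."""
    try:
        rows = json.loads(raw_data)["data"]
        predictions = [
            sum(w * x for w, x in zip(model["weights"], row)) + model["bias"]
            for row in rows
        ]
        return {"predictions": predictions}
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed input returns an error payload instead of crashing the service.
        return {"error": str(exc)}


init()
result = run(json.dumps({"data": [[1.0, 2.0]]}))
```

Loading the model in `init()` rather than in `run()` keeps per-request latency low, since the expensive step happens only once.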

Monitoring and logging are integral to Azure ML, providing transparency and oversight once ML models are live and handling real-world data. This monitoring capability is indispensable for maintaining the integrity of the application, allowing developers to detect and address performance issues, data drift, or anomalies that might affect model accuracy or user experience. Azure ML captures a wide array of metrics and logs model behavior, which can be reviewed to understand and improve model performance over time. The insights from this ongoing analysis help keep applications robust as data and usage patterns change.
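One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in training data versus live data. The sketch below is a generic implementation, not Azure ML’s monitoring code; the bin count, the smoothing constant `eps`, and the sample values are assumptions made for the example.

```python
import math


def histogram(values, edges):
    """Fraction of values in each bin; out-of-range values land in the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(baseline, live, bins=4, eps=1e-6):
    """Population Stability Index of live data against a baseline sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant
    edges = [lo + i * width for i in range(bins + 1)]
    expected = histogram(baseline, edges)
    actual = histogram(live, edges)
    # eps keeps the logarithm finite when a bin is empty.
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(expected, actual)
    )


training_values = [1, 2, 3, 4, 5, 6, 7, 8]
shifted_values = [7, 8, 9, 10, 7, 8, 9, 10]
stable_score = psi(training_values, training_values)
drift_score = psi(training_values, shifted_values)
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the application.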

Seamless Integration within Azure and Beyond

Integrating machine learning models into the broader application stack is just as crucial as developing the models themselves. Azure ML does not exist in a vacuum; it thrives within the vast Azure ecosystem that encompasses a wide array of cloud services, each capable of further enhancing the capabilities of an intelligent application. The native integration points within Azure ensure that developers and businesses can effortlessly synergize their ML models with other functionalities, spinning up comprehensive solutions that are both dynamic and robust.

The framework’s connectivity with other Azure services is central to building a unified environment. For instance, once a machine learning model is trained and ready to be deployed, it can be served from Azure Functions for serverless computing. This means that applications can scale on demand, responding to events and triggers with ML-driven actions while managing resource consumption efficiently. Similarly, integration with Azure IoT Hub opens up possibilities for intelligent applications that interact with the Internet of Things ecosystem. It allows models to be applied to live data streaming from sensors and devices, enabling smarter analytics and real-time decision-making in applications ranging from predictive maintenance to customer engagement.
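Applying a model to a live sensor stream can be sketched without any cloud services. In the example below, `handle_messages` stands in for an event-triggered function and a rolling z-score stands in for a trained model; both are simplifying assumptions, as are the message fields `device` and `temperature` and the sample readings.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of values."""

    def __init__(self, window=5, threshold=3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def score(self, reading):
        """Return True if the reading looks anomalous."""
        flagged = False
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            flagged = sigma > 0 and abs(reading - mu) / sigma > self.threshold
        # Only normal readings update the baseline, so a spike does not distort it.
        if not flagged:
            self.history.append(reading)
        return flagged


def handle_messages(messages, detector):
    """Stand-in for an event-triggered function: return devices with anomalies."""
    return [m["device"] for m in messages if detector.score(m["temperature"])]


readings = (20.0, 20.5, 19.5, 20.2, 19.8, 20.1, 45.0, 20.3)
messages = [{"device": "pump-1", "temperature": t} for t in readings]
alerts = handle_messages(messages, RollingAnomalyDetector())
```

Here a single detector tracks one device. A predictive maintenance system would typically keep separate state per device and replace the z-score with a trained model.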

For applications that require a rich set of AI capabilities without custom-developed models, Azure ML works alongside Azure Cognitive Services (rebranded as Azure AI services in 2023). This service provides off-the-shelf AI features, such as vision, language, and speech, which can be combined with bespoke Azure ML models to create multifaceted intelligent solutions. For example, an application could use a custom ML model for complex data analysis while also calling Cognitive Services to translate text or analyze sentiment, simplifying how intelligence is layered into the application.

Collaboration is a foundational aspect of innovation, and Azure ML is designed from the ground up to facilitate teamwork. With shared workspaces, concurrent access to datasets, and multi-user pipelines, Azure ML fosters a collaborative development environment. Versioning of models, datasets, and pipelines ensures that contributions from multiple team members are tracked and integrated systematically, reducing conflicts and improving efficiency.

Despite the comprehensive integration within the Azure platform, Azure ML acknowledges the larger technology ecosystem’s diversity and embraces it. Its machine learning services are agnostic to tools and frameworks, offering first-class support for popular open-source libraries such as TensorFlow, PyTorch, and scikit-learn. By doing so, it acknowledges the preferences of the developer community and allows for a smooth transition of existing projects or expertise into the Azure ML space, without compelling developers to forgo their tools of choice.

The convergence of Azure ML with open-source frameworks illustrates a commitment to interoperability and reduces the risk of vendor lock-in, a significant consideration for businesses wary of being tethered to a single provider. This open approach reflects Microsoft’s recognition of the importance of adaptability and integration in today’s tech landscape, where applications often span multiple platforms and systems. Developers can integrate their ML workflows with existing CI/CD pipelines, or use Azure DevOps for end-to-end project management, further knitting Azure ML into the developer’s ecosystem.
