Reinforcement Learning with OpenAI Gym

- February 8, 2024

- allix

- AI Education

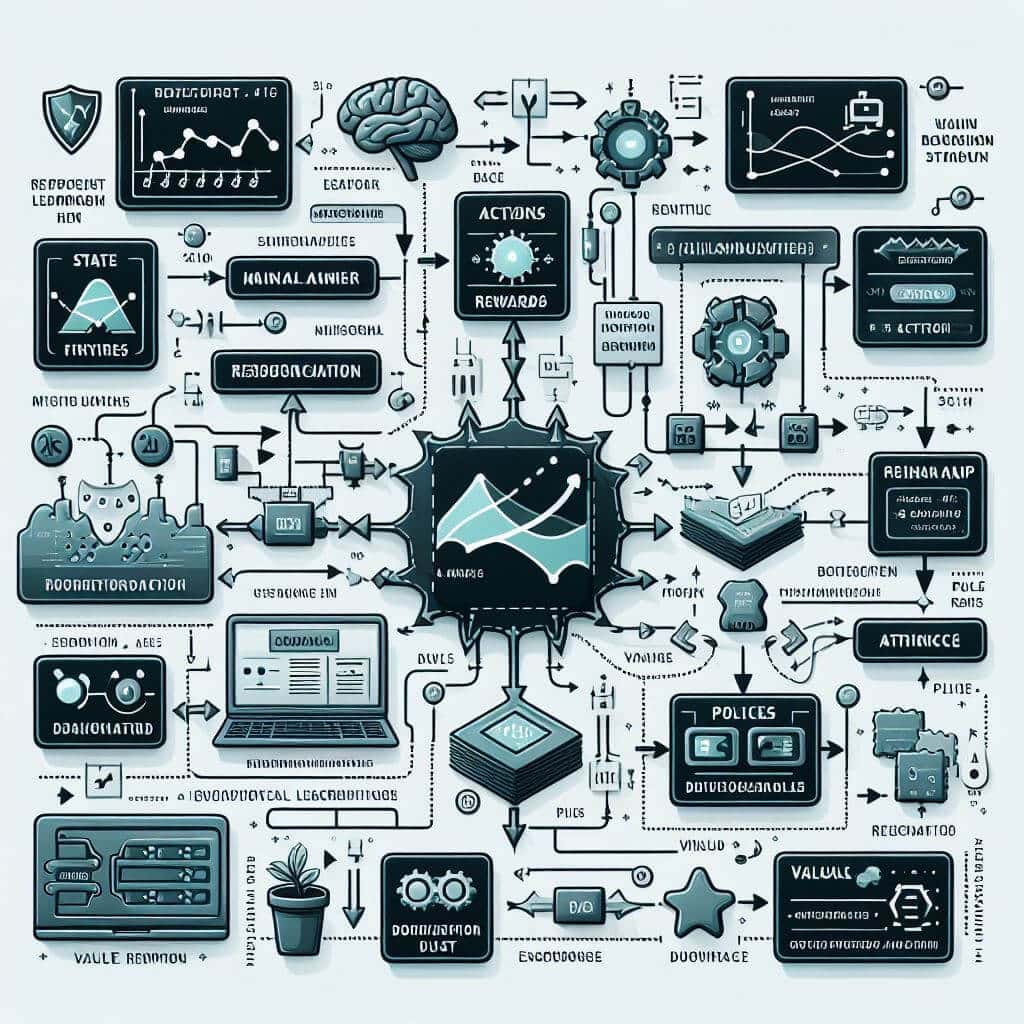

Reinforcement learning (RL) has moved to the forefront of artificial intelligence research because of its ability to solve complex problems that involve sequences of decisions. This methodology allows computers to learn from the consequences of their actions and adjust their strategies to maximize rewards. But what drives this advanced domain? Tools like OpenAI Gym give RL agents a well-known platform on which to train, learn, and improve their decision-making skills.

Understanding OpenAI Gym

OpenAI Gym positions itself as a central tool for both beginners and experts in the field of artificial intelligence. Its key role in the development of reinforcement learning is explained by the large set of environments that simulate a wide range of tasks and problems. These environments provide developers with a standardized way to measure and compare the performance of their RL algorithms. But what sets OpenAI Gym apart is its user-friendly design, which abstracts away many of the complexities traditionally associated with setting up a reinforcement learning simulation.

OpenAI Gym environments come in many shapes and sizes, each tailored to different learning goals and levels of difficulty. The platform includes everything from simple text-based environments to more complex ones with multi-dimensional visual inputs, such as those required for video game agents. This diversity is important when it comes to pushing the limits of RL algorithms, offering researchers and hobbyists the opportunity to test their models against both classical control theory problems and modern challenges.

The OpenAI Gym API allows users to interact with these environments using a small set of clear and concise commands. An agent in any given environment perceives its environment through observations, performs actions that affect the state, and receives rewards—signals that help guide the agent’s learning process. For AI developers, this process is simplified to writing a loop where the agent sequentially observes the state, makes decisions, performs actions, and optimizes its behavior based on feedback from the environment.
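The observe, act, reward loop described above can be sketched without installing anything. The `CountdownEnv` class below is a hypothetical stand-in, not part of OpenAI Gym; it only mimics the `reset`/`step` convention of recent Gym releases, in which `step` returns five values (older releases return four, with a single `done` flag). With the library installed, an environment created through `gym.make("CartPole-v1")` would take its place.

```python
import random


class CountdownEnv:
    """Hypothetical stand-in that mimics the Gym-style reset/step interface."""

    def __init__(self, start=5):
        self.start = start
        self.state = start

    def reset(self, seed=None):
        # Return the initial observation and an empty info dict.
        self.state = self.start
        return self.state, {}

    def step(self, action):
        # Action 1 moves the counter toward zero; action 0 wastes a step.
        if action == 1:
            self.state -= 1
        reward = 1.0 if action == 1 else -1.0
        terminated = self.state == 0  # the task itself is finished
        truncated = False             # no time limit in this toy example
        return self.state, reward, terminated, truncated, {}


def run_episode(env, policy, max_steps=100):
    """Observe, act, collect the reward; repeat until the episode ends."""
    obs, info = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(obs)
        obs, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        if terminated or truncated:
            break
    return total_reward


rng = random.Random(0)
random_return = run_episode(CountdownEnv(), lambda obs: rng.choice([0, 1]))
greedy_return = run_episode(CountdownEnv(), lambda obs: 1)
print(random_return, greedy_return)
```

The policy is passed in as a plain function, so the same loop works for a random agent and for one that always picks the useful action; a learning agent would additionally update itself inside the loop.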

By creating a single interface for these diverse environments, OpenAI Gym provides a seamless transition from simple to more complex scenarios. Users are spared the tedium of learning new interaction rules every time they change a project, which dramatically lowers the barrier to entry into the world of reinforcement learning and encourages experimentation.

For those who want to dive deeper, OpenAI Gym also supports custom environments. Users can design their unique settings and tasks according to their specific research needs or interests. This setup opens the door to endless problem-solving possibilities, allowing the platform to grow and evolve with the creative and scientific discoveries of its community.
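As a rough illustration of what a custom task involves, the sketch below defines a hypothetical `CorridorEnv` in plain Python. A real custom environment would subclass `gym.Env` and declare `action_space` and `observation_space`; plain attributes (`n_actions`, `n_states`) are used here as an assumption so the example runs without the library.

```python
class CorridorEnv:
    """Hypothetical custom task: walk right along a corridor to reach the goal."""

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.n_actions = 2      # 0 = move left, 1 = move right
        self.n_states = length  # positions 0 .. length - 1
        self.position = 0
        self.steps = 0

    def reset(self, seed=None):
        # Every episode starts at the left end of the corridor.
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        if action not in (0, 1):
            raise ValueError(f"invalid action: {action}")
        move = 1 if action == 1 else -1
        # Clamp to the corridor so the agent cannot walk through the walls.
        self.position = min(max(self.position + move, 0), self.length - 1)
        self.steps += 1
        terminated = self.position == self.length - 1          # goal reached
        truncated = not terminated and self.steps >= self.max_steps  # time limit
        reward = 0.0 if terminated else -1.0  # each non-goal step costs 1
        return self.position, reward, terminated, truncated, {}


env = CorridorEnv(length=4)
obs, info = env.reset()
trajectory = [obs]
done = False
while not done:
    obs, reward, terminated, truncated, info = env.step(1)  # always move right
    trajectory.append(obs)
    done = terminated or truncated
print(trajectory)
```

Separating `terminated` (the goal was reached) from `truncated` (the step limit ran out) keeps the two ways an episode can end distinguishable for the learning algorithm.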

From a practical perspective, OpenAI Gym’s open-source nature allows for constant updates and improvements through community contributions. It also gives full visibility into how each environment works, which is critical for scientific accuracy and reproducibility in research. Interaction data from each episode, such as rewards and episode lengths, can be recorded, giving researchers material to analyze and refine their algorithms.

Creating Your First Reinforcement Learning Model

As you start building a reinforcement learning model, OpenAI Gym serves as fertile ground for both practice and innovation. The journey begins with choosing an environment that fits your project goals. The range of difficulty levels and the variety of challenges available let both learners and experts match the complexity of the task to their models.

After selecting the environment, the next critical step is to initialize your RL agent, the decision maker in the simulation. The agent works by sensing the environment, taking actions, and adapting through learning. OpenAI Gym encapsulates these elements in an iterative process that captures the essence of reinforcement learning.

For those starting their first RL model, defining an agent’s decision-making policy often starts with a simple table-based approach such as Q-learning. In this method, the agent builds a so-called Q-table, a matrix in which each cell holds the estimated cumulative reward for performing a certain action in a certain state. With sufficient training, this table guides the agent to the best available actions in the environment.
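A minimal sketch of the table and its update follows, using the standard Q-learning rule Q(s, a) ← Q(s, a) + α [r + γ max Q(s', ·) − Q(s, a)]. The learning rate `alpha`, the discount factor `gamma`, and the helper names are assumptions introduced for illustration; they are not specified in the text.

```python
def make_q_table(n_states, n_actions):
    """One row per state, one column per action, all estimates start at zero."""
    return [[0.0] * n_actions for _ in range(n_states)]


def q_update(q, state, action, reward, next_state, done, alpha=0.5, gamma=0.9):
    """Apply one Q-learning update in place and return the new estimate."""
    # A terminal transition has no future value to bootstrap from.
    best_next = 0.0 if done else max(q[next_state])
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


q = make_q_table(n_states=3, n_actions=2)
q[2][1] = 10.0  # pretend state 2 is already known to be valuable
new_value = q_update(q, state=1, action=0, reward=1.0, next_state=2, done=False)
print(new_value)
```

The update moves the estimate for (state 1, action 0) part of the way toward the reward plus the discounted value of the best action in the next state, which is how value gradually propagates backward through the table.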

A key aspect of any reinforcement learning model is a feedback loop, where an agent observes the current state of the environment, chooses and performs an action, and is then rewarded or punished based on the outcome of that action. Through these rewards – or sometimes punishments – the agent learns the value of actions, refining its policies over successive iterations and learning episodes.

Setting up effective reward signals to guide agent learning is a delicate craft. Rewards should be designed to not only encourage the achievement of immediate goals (such as successfully balancing a pole on a cart), but also to direct action toward long-term goals (accumulating the greatest total reward over time). This concept of a reward strategy is fundamental to an agent’s learning, since poorly designed rewards can easily disrupt the learning process or lead to suboptimal policies.
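One common way to weigh immediate against long-term reward is the discounted return, the sum of rewards with each later reward scaled down by a factor `gamma`. The discount factor is an assumption here, since the text does not name one, and `discounted_return` is a hypothetical helper.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward at step t is weighted by gamma ** t."""
    g = 0.0
    # Work backward so each step adds its reward to the discounted future.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# A small reward now versus a larger reward two steps later.
early = discounted_return([1.0, 0.0, 0.0], gamma=0.9)
late = discounted_return([0.0, 0.0, 10.0], gamma=0.9)
print(early, late)
```

With `gamma` close to 1 the agent values delayed rewards almost as much as immediate ones; with `gamma` close to 0 it becomes short-sighted, which is one way a reward setup can push learning toward suboptimal policies.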

Training an RL agent requires a careful balance between exploring the environment and exploiting known paths to success. Initially, the emphasis is on exploration, since the agent has minimal knowledge of the environment. Over time, as the agent accumulates more experience, the learned values in the Q-table, or the policy parameters in more complex methods such as policy gradients, become more reliable. Consequently, the agent shifts toward exploitation, relying on its accumulated knowledge to choose the actions it expects to maximize reward.
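A widely used way to implement this shift is an epsilon-greedy rule with a decaying exploration rate. The sketch below is one possible version; the schedule parameters (`start`, `end`, `decay`) are illustrative assumptions, not values from the text.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def decayed_epsilon(episode, start=1.0, end=0.05, decay=0.99):
    """Exponentially shrink epsilon per episode, never going below `end`."""
    return max(end, start * decay ** episode)


rng = random.Random(0)
q_values = [0.1, 0.9, 0.3]  # estimated values of three actions in one state
for episode in (0, 100, 500):
    eps = decayed_epsilon(episode)
    action = epsilon_greedy(q_values, eps, rng)
    print(episode, round(eps, 3), action)
```

Keeping a small floor on epsilon means the agent never stops exploring entirely, which helps when early value estimates turn out to be wrong.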

Building your first RL model will take a lot of experimentation and learning. It is very important to observe the agent and understand why it makes certain decisions and how feedback from the environment shapes its behavior. This observation often involves trial and error, tuning learning parameters, reward mechanisms, or even the representation structure of the environment model.

Going Beyond Basic Models

The beginnings of any reinforcement learning model tend to lie in relatively simple algorithmic designs, but as skill and ambition grow, the need for more sophisticated techniques grows. Going beyond basic models often requires exploring the integration of deep learning, a subset of machine learning that uses multilayered neural networks to recognize and learn from high-dimensional data.

For those pushing the limits of what basic models can provide, incorporating neural networks into the reinforcement learning framework is a transformative step forward. Deep Q-networks (DQNs), which combine Q-learning with deep neural networks, have pioneered this approach. They allow agents to generalize across vast and complex state spaces where conventional Q-tables would be cumbersome or impossible to manage. DQNs have demonstrated their strength in particular on a variety of Atari games, where the visual input from the screen is high-dimensional but the underlying neural network distills it into effective action policies.
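In the commonly cited formulation, a DQN replaces the table lookup with a network $Q(s, a; \theta)$ and trains it by minimizing the squared difference between its prediction and a bootstrapped target. The separate target parameters $\theta^{-}$ and the discount factor $\gamma$ are standard ingredients assumed here; the text does not describe them.

```latex
L(\theta) = \mathbb{E}_{(s, a, r, s')}\left[
  \left( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \right)^{2}
\right]
```

The term inside the parentheses is the same temporal-difference error used in tabular Q-learning; only the way the values are stored and updated changes.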

Similarly, more advanced algorithms such as proximal policy optimization (PPO) and deep deterministic policy gradients (DDPG) represent important milestones in the field of RL, allowing agents to handle continuous action spaces with finer control and stability. Freed from the constraints of discrete action spaces, agents can vary their actions smoothly, which is especially important for tasks such as robotics or autonomous driving.

As model complexity increases, it becomes harder to balance exploring new strategies against exploiting known paths to success. Advanced models need enough variability in their actions to avoid getting stuck in local optima and to find potentially better strategies. Techniques such as entropy maximization become crucial at this stage, encouraging the model to explore a more diverse range of possible actions.
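One way this is often done is to subtract an entropy bonus from the policy loss, so that policies spreading probability over several actions are penalized less than nearly deterministic ones. The sketch below computes that entropy for a discrete action distribution; the weight `beta` and the helper names are assumptions for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def loss_with_entropy_bonus(policy_loss, probs, beta=0.01):
    """Lower the loss for more exploratory (higher-entropy) policies."""
    return policy_loss - beta * entropy(probs)


uniform = [0.25, 0.25, 0.25, 0.25]  # maximally exploratory
peaked = [0.97, 0.01, 0.01, 0.01]   # almost deterministic
print(entropy(uniform), entropy(peaked))
print(loss_with_entropy_bonus(1.0, uniform), loss_with_entropy_bonus(1.0, peaked))
```

The size of `beta` controls the trade-off: too large and the policy stays close to random, too small and it may collapse onto one action before better strategies are found.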

Further advancement of reinforcement learning models also requires careful consideration of an important dilemma known as the “credit assignment problem.” When an agent receives a reward or punishment, it must determine which actions were most responsible for that outcome. In complex environments where rewards are rare or delayed, figuring out which decisions led to success or failure can be a non-trivial task. Advanced RL models use techniques such as eligibility traces or temporal-difference (TD) learning to address this problem, providing a way to assign credit to sequences of actions more accurately.
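To make the idea concrete, here is a small sketch of tabular TD(λ) value estimation with accumulating eligibility traces. The function name, the transition format, and the parameter values are assumptions chosen for illustration. Each visited state keeps a decaying trace, so a reward at the end of an episode also updates the states that led to it.

```python
def td_lambda_episode(values, episode, alpha=0.1, gamma=0.9, lam=0.8):
    """Update state values in place from (state, reward, next_state, done) steps."""
    traces = [0.0] * len(values)
    for state, reward, next_state, done in episode:
        next_value = 0.0 if done else values[next_state]
        td_error = reward + gamma * next_value - values[state]
        traces[state] += 1.0  # accumulating trace for the state just visited
        for s in range(len(values)):
            # Credit each state in proportion to how recently it was visited.
            values[s] += alpha * td_error * traces[s]
            traces[s] *= gamma * lam
    return values


# A three-step chain 0 -> 1 -> 2 -> terminal (3), rewarded only at the end.
episode = [(0, 0.0, 1, False), (1, 0.0, 2, False), (2, 1.0, 3, True)]
with_traces = td_lambda_episode([0.0] * 4, episode, lam=0.8)
one_step = td_lambda_episode([0.0] * 4, episode, lam=0.0)
print(with_traces)
print(one_step)
```

With `lam=0.0` the method reduces to one-step TD and only the final state's value changes after this single episode; with traces, the earlier states receive a share of the credit immediately.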

As the complexity of the models increases, so does the demand for resources, which requires significantly more computing power and memory. Training these complex neural networks, often consisting of millions of parameters, can be extremely resource-intensive and time-consuming. Using hardware accelerators such as graphics processing units (GPUs) or tensor processing units (TPUs) can significantly reduce training time, making the task of developing and iterating more complex models feasible.

The judicious use of such advanced hardware and complex models also emphasizes the importance of monitoring and fine-tuning. Hyperparameter tuning, model architecture decisions, and training procedures are becoming increasingly important, with the smallest adjustments potentially having a significant impact on performance.

As models grow more intricate, developers and researchers must also implement more rigorous testing and validation regimes to ensure that the models generalize beyond academic settings. This rigor helps create RL models that not only perform well in their training environments but are also robust and adaptable to a variety of scenarios, including the unpredictability of real-world applications.
